This past week I cloned myself.

Well, I cloned my voice.

I’ve been experimenting with ElevenLabs’ voice cloning and conversational AI agents. It’s been a wild ride, and I’m excited to tell you about it.

What is ElevenLabs?

ElevenLabs is an AI company that is focused on producing high-quality audio for a wide range of applications. Perhaps the most obvious use case is automated customer service agents, but the potential extends far beyond that into other areas like audiobook narration, dubbing videos into foreign languages, or producing virtual podcasts.

When I first started playing with the website, I was blown away by the quality of its built-in virtual assistant. This went a long way in demonstrating for me the power of a human-sounding AI-powered assistant.

Check out the brief video below where the virtual assistant answers some of my questions and helps me navigate around the website.

Kind of amazing, right?

Not only did I find the quality of the voice drawing me in, but I appreciated that this AI agent was able to navigate around the website for me, bringing me to the exact page that I needed.

This interaction gave me a glimpse of the near future where AI agents are integrated into websites and software, helping to troubleshoot problems or show us exactly where to locate those hard-to-find settings. That feels really powerful to me.

Voice Cloning

One of the most amazing features of ElevenLabs is the ability to clone a user’s voice. I wanted to give this a try, so I purchased the “Starter Plan” (for about $11 per month), which provided me with access to “instant voice cloning.”

The process required me to upload a minimum of 10 seconds of recorded speech, but encouraged me to record up to 2 minutes for the best results.

I was somewhat impressed by the results, but it still sounded robotic. I wanted to explore the full capability of ElevenLabs tools, so I upgraded to the “Creator Plan” ($22/month) to unlock “professional voice cloning.”

The Professional Voice Clone requires at least 30 minutes of recorded audio (which can be spoken directly into the platform or taken from previous audio / video recordings). Users can upload several hours of recorded content to get the best results.

For my purposes, I uploaded about 40 minutes of narration and videos. The result was a much improved copy of my voice.

Play the audio clip below to hear a comparison between the instant voice clone, the professional voice clone, and my actual recorded voice.

To be honest, the professional voice clone is pretty impressive. While there are some odd pronunciations and curious choices in word emphasis, I think most of my friends and family would agree that it sounds pretty much just like me.

What would you do with a voice clone?

Well, the first thing I did was show my kids. They thought it was pretty cool. We had lots of fun playing with the “Text to Speech” tool, which lets you type anything and then listen to my voice saying it just a few moments later.

Naturally, each of them wanted to type something ridiculous for their father to say. (Let’s just say that there was a lot of potty humor involved.)

But I had a more noble purpose in mind. As an online astronomy professor, I wanted to use my custom voice clone to power an AI chat bot so that my students could speak with “me” about questions they may have in the course.

I have no current intentions of deploying such a tool to all my students, but I wanted to test the current technology to see how it worked and how difficult it was to set up.

Well, ElevenLabs made it surprisingly easy with their “Conversational AI Agents.”

Conversational AI Agents

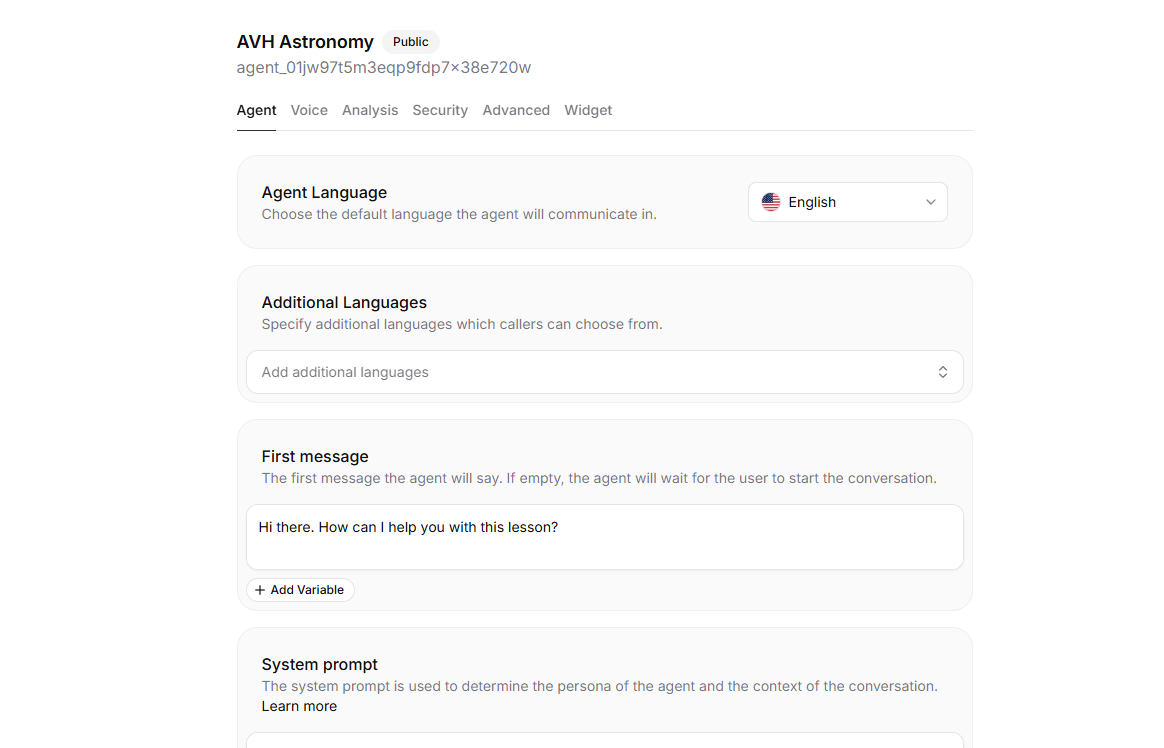

With ElevenLabs tools, it is trivially easy to create a new AI agent. After cloning your voice, you simply select it as the voice of the agent and write a system prompt that describes how you would like the agent to behave.

You can customize a wide range of settings, but if you use the defaults, then you can launch your AI agent in a matter of seconds with just a few clicks.

In my case, I wanted the AI agent to use my picture for the profile picture and to have a specific system prompt and knowledge base so that it could provide useful information to students.

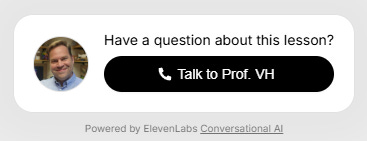

Using a single-line embed code, you can add a small widget to any webpage, which will initiate a conversation with the agent.

Testing with Family and Friends

Before testing this with students in the wild, I wanted a more controlled experiment. I shared a link to my AI agent with several of my friends and family.

For this version of the AI agent, I primed the system prompt with a few specific details of each person who would be speaking with the agent. That way, when they told the agent their name, it would respond (in my voice, remember) with a familiar greeting and a specific question targeting their interests.

My system prompt included text like: “You may talk to my brother Ryan. He is a high school English teacher. He also likes to explore the use of AI in his teaching, so you can ask him about that.”
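If you have more than a handful of people to describe, it can be easier to generate that part of the system prompt than to type it by hand. Here is a minimal sketch of the idea in Python. The `Person` class, the `build_system_prompt` function, and the base instructions are all made up for illustration; they are not part of ElevenLabs. The output is just text that you would paste into the agent's system prompt field.

```python
from dataclasses import dataclass


@dataclass
class Person:
    """One person the agent might talk to (hypothetical structure)."""
    name: str
    relation: str   # e.g. "brother", "friend"
    details: str    # a sentence or two the agent can draw on


def build_system_prompt(base: str, people: list[Person]) -> str:
    """Append one short paragraph per person to the base instructions."""
    lines = [base.strip(), ""]
    for p in people:
        lines.append(f"You may talk to my {p.relation} {p.name}. {p.details}")
    return "\n".join(lines)


# Placeholder base instructions; write your own to suit your agent.
base_instructions = "Ask each caller for their name, then greet them warmly."

people = [
    Person(
        name="Ryan",
        relation="brother",
        details=(
            "He is a high school English teacher. He also likes to explore "
            "the use of AI in his teaching, so you can ask him about that."
        ),
    ),
]

prompt = build_system_prompt(base_instructions, people)
print(prompt)
```

Keeping the personal details in a list like this also makes it easy to remove someone's information later without rereading the whole prompt.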

I texted my friends and family the link and then waited.

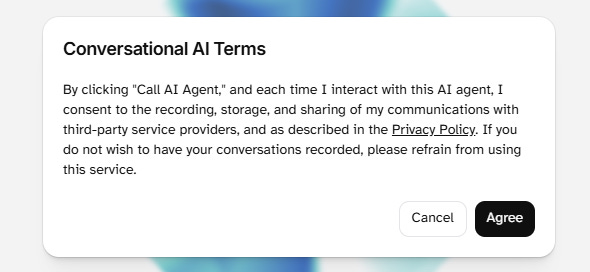

The next morning, I logged in to ElevenLabs to see the usage stats. I wondered if anyone had even tried it. That’s when I discovered that all of the conversations had been recorded!

I guess I should have known this… and the people using the agent should have been aware of this as well since it is clearly stated in the terms that appear when you initiate a conversation.

Listening to the recorded conversations between my voice clone and my friends & family unlocked some very uncomfortable thoughts. Suddenly it dawned on me that every conversation I have ever had with an AI chatbot (like ChatGPT) is recorded in some database somewhere.

All of the personal details, private reflections, insecurities, etc. captured in those moments alone, driving in my car and talking with ChatGPT - they are recorded in a place that I do not have access to. Can I delete them? (I doubt it.) Are they being used to train the next generation of models? (Almost certainly.) Yikes.

While listening to the conversations was fascinating, it also felt like an invasion of privacy. Honestly, it raised some very conflicted feelings…

It was also hilarious.

A call from my favorite brother

When my brother Ryan called to use the AI agent, he was prompted in the same way as everyone else, “Hi there. Which brother am I talking to?” But instead of simply telling the bot his name, he replied, “Your favorite brother.” What ensued was a delightful exchange.

You can listen to an edited version of the conversation here:

It was fascinating to see how each of my friends and family tested the AI agent in different ways. Some tried to actually use it as a brainstorming partner, asking real questions about the projects they were currently working on. Others tried to probe the depth of my agent’s knowledge about me.

Did it know my kids’ names? (Yes, I put that in the system prompt.) What about memories from old family trips? (Nope, no memories of that.)

Integrating the AI Agent into an Astronomy Lesson

After testing things out over several days, I figured it was time to close the loop and test the AI agent with my students.

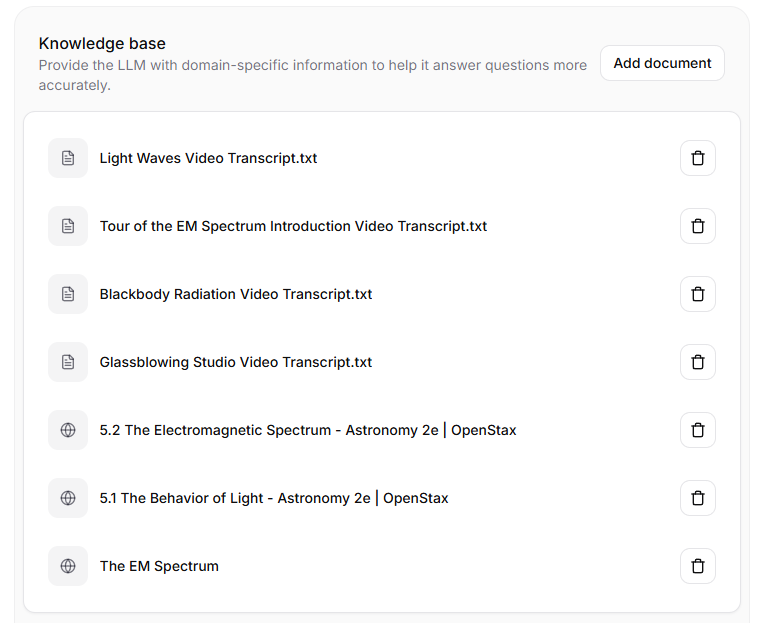

I created a new version of a Conversational AI Agent. This time, I filled the agent’s knowledge base with assigned readings and video transcripts from a single lesson of the course.

With this information in hand, the agent could answer specific questions from the lesson’s videos and textbook passages in addition to general questions about astronomy.

Sidebar: The “brain” of the agent comes from one of the leading LLMs (you can actually choose whether you want to use ChatGPT, Claude, or Gemini). So, the agent is very knowledgeable about all sorts of astronomy topics - even those beyond what I have put in the knowledge base.

I sent a message to all the students in one of my summer sections of astronomy, inviting them to test out the conversational chat bot. I was very deliberate about disclosing that the conversation would be recorded and that it was not a required activity.

After 36 hours, precisely zero students had tried the chatbot.

I must admit, this was quite a surprise to me. I thought that at least some of the students would be curious and want to give it a try. Perhaps there’s a lesson here about my students’ attitude toward this technology. I will need to ask them about that.

Well, since my students aren’t interested, I will share it here with you so that you can give it a go. Again, any conversation you have with the AI agent will be recorded, and I may listen to it. (Be careful what you say.)

Click here to view the astronomy lesson with my embedded AI agent.

(I can only guarantee that the agent in the lesson will continue working until mid-June 2025. After that, I don’t think I will keep paying for it.)

Test the limits of the conversational agent, and let me know what you discover.

A few more details about ElevenLabs tools…

Considering the incredible power of voice cloning and the quality of the various voices in their library, I think ElevenLabs is quite a deal for its current subscription price. That said, starting in late June, ElevenLabs will start charging users for the underlying model (the LLM brain) that is powering their conversational AI agents.

So, for the time being, my agent is benefitting from using the most sophisticated and expensive models available, but in a few weeks I would have to pay something like $0.008 per minute in order to continue to use the most powerful models (in addition to the usage costs associated with the ElevenLabs voices).

For a sense of scale, I calculated that it would cost approximately $112 to use ElevenLabs to narrate a 300-page book (roughly a 10-hour audiobook). That corresponds to $11.20 per finished hour of audio. In my mind, this is a helpful comparison to the hourly rate that you might pay someone (or yourself) to do the same work.
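If you want to run the same back-of-the-envelope numbers for your own project, here is a small Python sketch. The function names are my own, and the inputs are just the approximate figures quoted in this post ($112 for about 10 hours of narration, and roughly $0.008 per minute for the LLM), so treat the results as rough estimates rather than official pricing.

```python
def cost_per_hour(total_cost: float, hours: float) -> float:
    """Average cost per finished hour, rounded to cents."""
    return round(total_cost / hours, 2)


def hourly_from_per_minute(rate_per_minute: float) -> float:
    """Convert a per-minute rate into a per-hour rate, rounded to cents."""
    return round(rate_per_minute * 60, 2)


# Approximate figures from this post, not an official price list.
narration = cost_per_hour(total_cost=112.00, hours=10)
llm = hourly_from_per_minute(rate_per_minute=0.008)

print(f"Narration: ${narration:.2f} per finished hour")
print(f"LLM charge: ${llm:.2f} per hour of conversation")
```

With these inputs, the narration comes to $11.20 per finished hour, and the LLM charge works out to about $0.48 per hour of conversation, before any voice usage costs.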

I also want to mention that it is possible to turn off recordings for your conversational AI agents (or to have the recordings delete automatically after any number of days). So those with privacy concerns can continue to modify the settings to meet their needs.

Ok, I hope you found this interesting. If this post sparks any questions, I encourage you to reach out by email or comment on this post.