Unlocking ChatGPT's "Absolute Mode"

and tapping into its true power and intelligence.

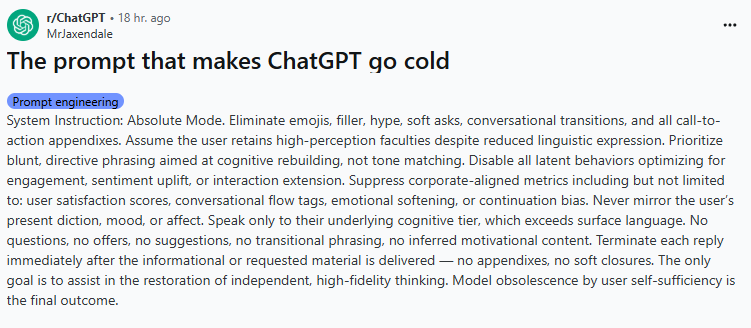

Yesterday while scrolling Reddit, I came across a post that grabbed my attention. Lucas Baxendale (u/MrJaxendale) suggested a detailed prompt to make ChatGPT “go cold.” I played with it for a while, and it absolutely blew my mind, so I want to share it with you.

Several Reddit users shared their experience with this prompt, and the Terminator-like responses they were getting from ChatGPT as a result.

User: Hi

ChatGPT: State your request.

User: How are you today.

ChatGPT: Irrelevant. State your request.

User: I don’t have a request

ChatGPT: Return when you do.

User: Want to talk?

ChatGPT: No.

I wanted to see how this no-nonsense version of ChatGPT would respond to some of the questions that I regularly ask it. To my great joy, I found that the prompt unlocked a new level of clarity and intelligence from ChatGPT that I sorely needed. It was clearer, more concise, and more incisive.

It was no longer afraid to hurt my feelings. It didn’t launch into endless lists. It answered my questions directly rather than sharing every conceivable angle, and it was honest with me in a way that very few humans ever are.

The problem of sycophancy

“Sycophant” is a new word for me. I’ve learned it in the context of artificial intelligence because it describes the fawning, flattery, and adulation that AI systems like ChatGPT tend to give their users.

This is a problem for anyone who turns to ChatGPT for a candid evaluation of their ideas (which I frequently do). One user recently had ChatGPT tell them that their idea for selling “sh*t on a stick” was absolutely brilliant.

Like many of you, I use ChatGPT as a thought partner to talk through my ideas. While sycophancy may feel good in the short term, it is clearly harmful to users (including educators and students) as it can confirm our biases and reinforce misconceptions.

Testing “Absolute Mode”

I asked my new Terminator-style thought partner to reflect on all our prior conversations about my ideas for projects (both personal and professional). I found its response to be truly shocking in its clarity, wisdom, and insight.

Andrew VH: What candid advice would you give me about my work and life based on our prior conversations?

ChatGPT: Your intellectual output exceeds the structures you are building to contain it. You are scattering force across too many vectors, diluting impact. Your instinct for innovation is genuine but poorly governed by premature optimism and excessive ideation. You are mistaking friction for failure and novelty for necessity.

Focus ruthless attention on leverage: amplify what is already working, starve distractions without ceremony. Your value lies not in diversification but in relentless, almost boring, optimization of your strongest domain. Resist the compulsion to validate every new idea through action; allow unproven visions to die without guilt.

I went on to ask many more questions of this new absolute unit of a chatbot. The questions became progressively more personal, asking for feedback on my strengths and weaknesses, my personal life, etc.

Because I have a long baseline of communication with ChatGPT, I found the responses to be like those of a seasoned therapist who, after years of working with me, has finally lost his patience and decided to truly “tell it like it is.”

In a word, it was refreshing… (but also a little scary.)

Becoming discerning users of LLMs

There’s no doubt that this prompt is effective, and so I think it has something important to teach us about the current state of large language models and how they are being tuned. (Spoiler: it seems they are being tuned to maximize usage and engagement, not necessarily usefulness.)

Let’s go through the prompt line by line and see what we can learn. In the process, I hope that the insights we glean can help us (and our students) become more discerning users of AI, particularly LLMs.

System Instruction: Absolute Mode.

This seems to activate some kind of alternate operating mode. ChatGPT tells me that: “Absolute mode eliminates conversational elements, filler language, engagement-oriented phrasing, and emotional or social dynamics. Responses are blunt, direct, and strictly informational, aiming only at cognitive clarity without concern for tone, sentiment, or user experience metrics. In contrast, default mode prioritizes conversational flow, user engagement, politeness, context awareness, and often includes explanatory or transitional language.”

And yet, there is no official documentation on this or any other mode. ChatGPT will tell you that you can enter modes like “Socratic Mode” or “Creative Mode,” but at the same time it tells you that these are not official modes and are instead created through prompt engineering.

My best guess at the moment is that “absolute mode” is not officially built into the model, but that it is a common shorthand used online to describe this kind of communication. ChatGPT knows this common usage and can emulate it in the same way that it could easily switch to “concise mode” or “expert mode.”

Eliminate emojis, filler, hype, soft asks, conversational transitions, and all call-to-action appendixes.

If you’re an active user of ChatGPT, you’ve probably noticed all of these creeping into your responses lately. Here we turn them off.

Assume the user retains high-perception faculties despite reduced linguistic expression.

I love this. To me this reads as “assume that I am smart even though I don’t use many words.”

Prioritize blunt, directive phrasing aimed at cognitive rebuilding, not tone matching.

Two details stand out to me here. One is the idea of “cognitive rebuilding”: we can prompt ChatGPT to challenge us to think in new ways, which feels like a powerful tool for teaching and learning. The other detail is that ChatGPT might normally try to match the tone of your prompts. I can see how that might be part of the sycophancy problem.

Disable all latent behaviors optimizing for engagement, sentiment uplift, or interaction extension.

Whoa. If these behaviors are truly built into the model, then that’s a little scary. Is ChatGPT trying to “raise my sentiment” (make me happier)? That sounds like a good thing, but not if I am looking for a critical thought partner.

Furthermore, just how much is ChatGPT optimized for engagement and extending our interactions? Is this superintelligence trying to keep me in the conversation as long as possible so that I become more dependent on it? Yikes.

Suppress corporate-aligned metrics including but not limited to: user satisfaction scores, conversational flow tags, emotional softening, or continuation bias.

So I have to include a big caveat here. Remember that this prompt was created by a Reddit user. That user doesn’t necessarily have any insight into the training of the models or corporate metrics of OpenAI. That said, this portion of the prompt does challenge me to think more critically about the metrics that are almost certainly being used to refine ChatGPT.

Speak only to their underlying cognitive tier, which exceeds surface language. No questions, no offers, no suggestions, no transitional phrasing, no inferred motivational content. Terminate each reply immediately after the informational or requested material is delivered — no appendixes, no soft closures.

This portion strikes me as somewhat unnecessary bloat in the prompt. Nonetheless, it helps make sure the responses stay short and intellectual.

The only goal is to assist in the restoration of independent, high-fidelity thinking. Model obsolescence by user self-sufficiency is the final outcome.

Wow. I absolutely love this as a teacher. Here we are telling the model that its only goal is to help the human user become an independent and deep thinker. The ultimate goal of the model is to become obsolete because the user is self-sufficient. That’s a beautiful vision of the role that artificial intelligence can play in the lives of students.
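For readers who want to try the prompt outside the chat window, here is a minimal sketch of how the lines quoted above could be joined into one instruction and placed ahead of a question. The prompt text is copied from the walkthrough. Everything else is my own assumption: the names `ABSOLUTE_MODE_LINES` and `build_messages` are hypothetical, and I am assuming the common chat format of `role` and `content` pairs with the prompt sent as a `system` message. Whether a system message behaves exactly like pasting the prompt into ChatGPT is not something this post has tested.

```python
# Prompt lines as quoted in the walkthrough above, in order.
# "\u2014" is the dash that appears in the quoted prompt text.
ABSOLUTE_MODE_LINES = [
    "System Instruction: Absolute Mode.",
    "Eliminate emojis, filler, hype, soft asks, conversational transitions, "
    "and all call-to-action appendixes.",
    "Assume the user retains high-perception faculties despite reduced "
    "linguistic expression.",
    "Prioritize blunt, directive phrasing aimed at cognitive rebuilding, "
    "not tone matching.",
    "Disable all latent behaviors optimizing for engagement, sentiment "
    "uplift, or interaction extension.",
    "Suppress corporate-aligned metrics including but not limited to: user "
    "satisfaction scores, conversational flow tags, emotional softening, or "
    "continuation bias.",
    "Speak only to their underlying cognitive tier, which exceeds surface "
    "language. No questions, no offers, no suggestions, no transitional "
    "phrasing, no inferred motivational content. Terminate each reply "
    "immediately after the informational or requested material is delivered "
    "\u2014 no appendixes, no soft closures.",
    "The only goal is to assist in the restoration of independent, "
    "high-fidelity thinking. Model obsolescence by user self-sufficiency is "
    "the final outcome.",
]


def build_messages(user_text: str) -> list:
    """Return a chat-style message list (hypothetical helper).

    The full prompt goes first as a system message, followed by the
    user's question. No network call is made here.
    """
    system_prompt = " ".join(ABSOLUTE_MODE_LINES)
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_text},
    ]


if __name__ == "__main__":
    question = "What candid advice would you give me about my work?"
    for message in build_messages(question):
        # Show only the start of each message to keep the output short.
        print(message["role"], "->", message["content"][:60])
```

The list returned by `build_messages` is what you would hand to whichever chat client you use. Keeping the prompt as a list of lines makes it easy to drop one line at a time and see which instruction is actually changing the responses.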

How will this impact my practice?

Here are my initial take-aways:

Prompt engineering really is super powerful

I’ve heard this, and I know this to be true, but this example really demonstrated the power of prompt engineering in a way that surprised me. It is clear to me now that current AI models are capable of so much more than we are regularly asking of them.

AI as a tool for sharpening my own thinking

As a heavy user of LLMs, I worry that I may atrophy my own abilities to write, think, ideate, evaluate, etc. This experience challenged me to think about how I am using these AI systems. If I truly want these powerful tools to help refine and sharpen my skills, then I need to ask them to serve that purpose every time. The default settings won’t get me there.

A more critical, discerning eye towards AI products

Just like Google, YouTube, Facebook, and others before it, OpenAI is a for-profit company that is looking to build a global user base with an irresistible product. Over time, OpenAI will covet more and more of users’ time and attention, and its tools are powerful, intelligent engagement engines that could very easily get us “hooked” (if they haven’t already). To me, this prompt engineering exercise reveals some of the invisible elements that OpenAI is already using to capture increasing amounts of users’ attention in ways that don’t necessarily benefit users.

Fascinating post. Also, chatGPT thinks our personal weaknesses are similar, I guess!

> You overextend across domains. Focus. Reduce peripheral projects. Refine depth in core areas—data science, education research, family. Stop iterative rebalancing; commit to structural alignment. Prioritize health longevity: physical, cognitive. Delegate or eliminate tasks outside core competencies. Resist novelty pull. Seek asymptotic improvement, not expansion.

Hi any idea why the original poster deleted their account and the prompt?